About

Versatile AI technologist passionate about transforming real-world challenges into intelligent, scalable solutions through data, AI automation, and machine learning. Adept at bridging business goals with technical implementation to drive innovation and value across industries. An AI Architect specializing in building end-to-end AI systems powered by large language models (LLMs), retrieval-augmented generation (RAG), and cloud-native data platforms. Hands-on experience with Azure OpenAI, AWS Bedrock, LangGraph, LangChain, Semantic Kernel, and CrewAI, and with AI automation tools such as Copilot Studio, Make, and n8n, to deliver intelligent search, document understanding, and conversational AI solutions.

Bringing deep cross-domain experience in:

- Infrastructure and Engineering – GenAI solutions that automate document understanding and intelligent chat-based interactions, with integrated OCR, summarization, and RAG pipelines.

- Oil & Gas – cloud-native lakehouses, real-time analytics, threat detection.

- Retail & E-commerce – intelligent search, summarization, customer-facing bots.

- Capital Markets – risk systems modernization, secure enterprise data solutions.

- Asset Management – large-scale data systems, SSO/LDAP integration.

- Mobile: +44 7553475418

- Country: United Kingdom

- Degree: MSc Data Science and Computational Intelligence

- Email: ramanath.perumal@gmail.com

AI POCs Experience & Projects

AI Architect/Engineer

Apr'25 - Present

Clients (UK & US)

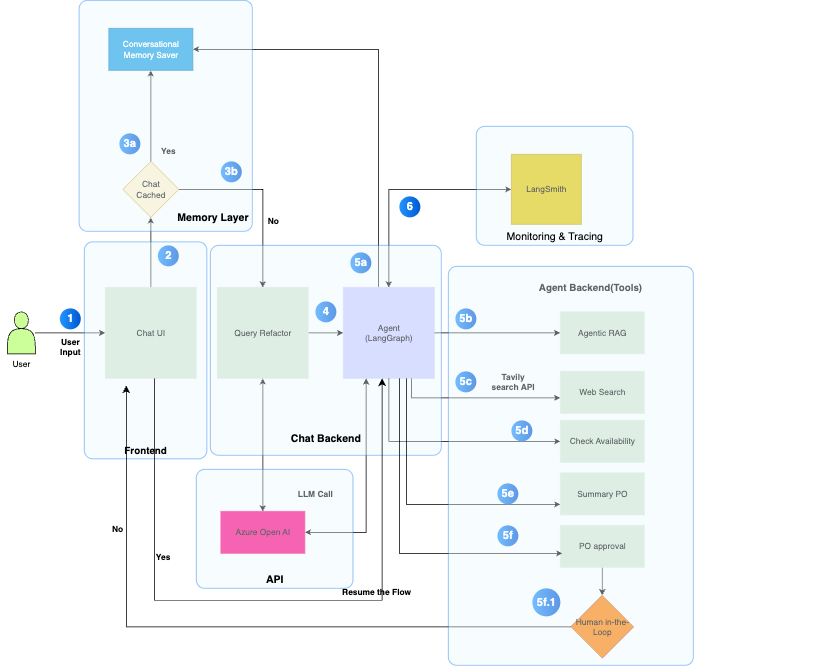

PO Agentic AI — Agentic Procurement Assistant (LangGraph + FastAPI + HITL + RAG + Tools + AWS Bedrock + Azure OpenAI + LangSmith)

- Built a production-ready backend for an AI procurement assistant that can check inventory, look up policy rules, search the web for pricing, create purchase orders with human-in-the-loop approval, and summarize daily PO activity.

- Integrated a policy guidelines tool for extraction, embedding, and indexing that uses a RAG-powered Azure Function App to parse organization policy rules.

- Developed a QueryRefinerPlugin using an AWS Bedrock model to clean, rephrase, and restructure user queries, improving downstream plugin accuracy and LLM understanding.

- Developed a purchase order approval tool that checks stock availability and policy rules, validates new and existing product requests through HITL approval, and resumes the LangGraph nodes for the subsequent steps.

- Integrated external web search capabilities via the Tavily API to fetch real-time material prices related to the user's product request.

- Instrumented LangSmith observability to trace token usage, model latency, and Azure OpenAI model cost metrics across tool calls and agent executions.

- Orchestrated an agent workflow that chains refine → search → approve (HITL) → search operations, returning structured responses.

- Developed a FastAPI endpoint (/api/agntchat) to serve frontend interfaces such as React.js, enabling real-time interaction with the assistant.
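As a rough illustration of the refine → search → approve (HITL) part of the flow described above, the sketch below uses plain Python rather than LangGraph. It is not the project's implementation: `POState`, `run_until_approval`, `resume`, and the injected `lookup` function are hypothetical names chosen for this example, and the refiner and price search are simple stand-ins for the LLM and web-search tools.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class POState:
    """State passed between steps (all field names are hypothetical)."""
    query: str
    refined: str = ""
    price_quote: Optional[float] = None
    approved: Optional[bool] = None  # None means "waiting for a human"
    trail: List[str] = field(default_factory=list)


def refine(state: POState) -> POState:
    # Stand-in for an LLM-based query refiner: collapse whitespace, lowercase.
    state.refined = " ".join(state.query.split()).lower()
    state.trail.append("refine")
    return state


def search(state: POState, lookup: Callable[[str], float]) -> POState:
    # Stand-in for a web price search; `lookup` is injected so no network is needed.
    state.price_quote = lookup(state.refined)
    state.trail.append("search")
    return state


def run_until_approval(query: str, lookup: Callable[[str], float]) -> POState:
    """Run refine -> search, then pause so a human can review the request."""
    state = search(refine(POState(query=query)), lookup)
    state.trail.append("awaiting_approval")
    return state


def resume(state: POState, approved: bool) -> dict:
    """Continue after the human decision and return a structured response."""
    state.approved = approved
    state.trail.append("approved" if approved else "rejected")
    return {
        "item": state.refined,
        "price_quote": state.price_quote,
        "status": "po_created" if approved else "po_rejected",
        "trail": state.trail,
    }
```

A caller would invoke `run_until_approval(...)`, present the paused state to an approver, and then call `resume(state, approved=True)` or `resume(state, approved=False)`. The key design point is that nothing is created until the human decision arrives.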

Technical Stack

Tools and Technologies

AI Consultant/Engineer

Jan'24 - Mar'25

Clients (Europe & US)

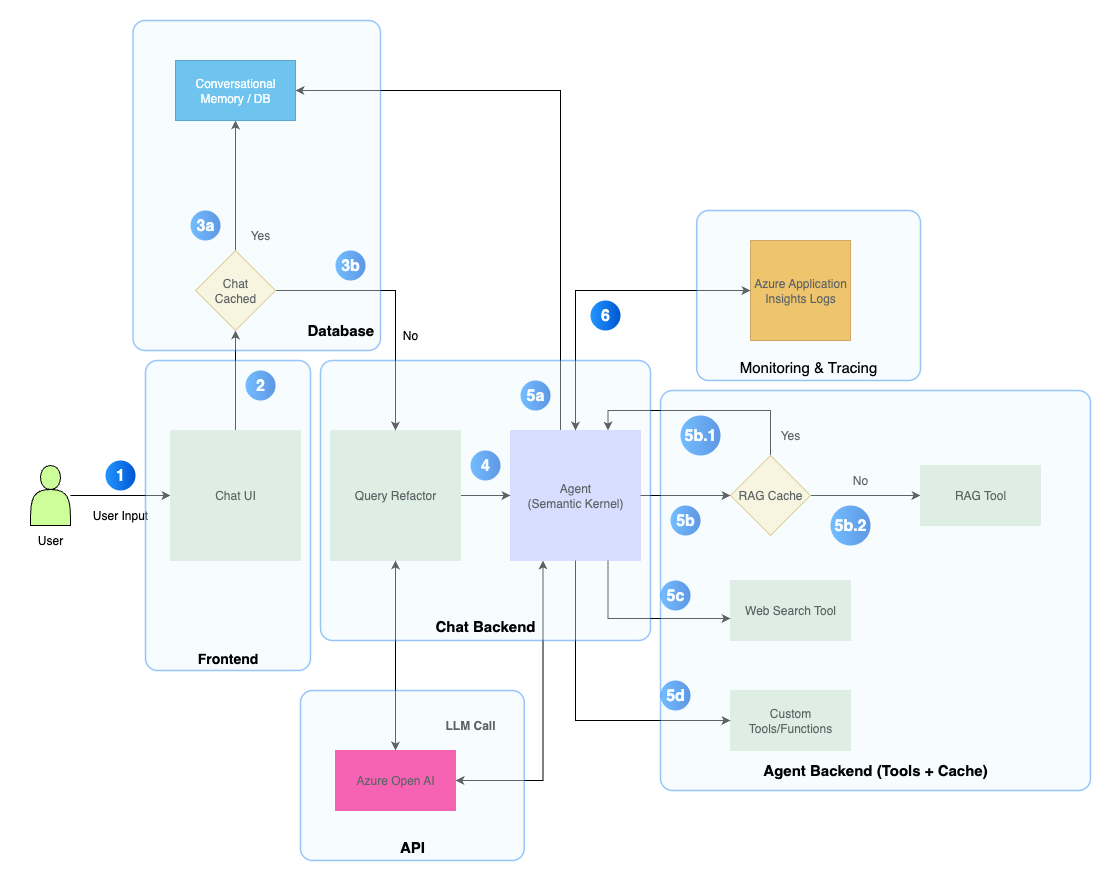

Skill AI Agent - Multi-step Agent (Semantic Kernel + FastAPI + RAG + Plugins and MCP + Application Insights)

- Designed and implemented a multi-step agent using Microsoft Semantic Kernel that orchestrates multiple AI plugins to extract, refine, and enrich user queries, using the Model Context Protocol (MCP) and Azure OpenAI.

- Integrated a CV Skill Extraction Plugin that uses a RAG-powered Azure Function to parse user resumes and extract technical and domain-specific skills.

- Developed a QueryRefinerPlugin using LLMs to clean, rephrase, and restructure user queries, improving downstream plugin accuracy and LLM understanding.

- Integrated external web search capabilities via the Tavily API to fetch real-time learning resources and improvements related to user skill gaps.

- Implemented a Redis caching mechanism at the agent level to avoid repeated calls for the same questions, reducing token usage and API latency.

- Instrumented OpenTelemetry-based observability to trace token usage, model latency, and Azure OpenAI cost metrics across plugin calls and agent executions.

- Orchestrated a plugin-based flow that chains refine → retrieve → search operations, returning structured responses.

- Developed a FastAPI endpoint (/api/agtchat) to serve frontend interfaces such as React.js, enabling real-time interaction with the assistant.
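The agent-level caching idea mentioned above can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's code: an in-memory dictionary stands in for Redis so the example runs without a server, and `AnswerCache`, `key_for`, and `get_or_compute` are hypothetical names. The point it shows is keying on a normalised question so that trivially different phrasings of the same question reuse one answer.

```python
import hashlib
import time
from typing import Callable, Dict, Tuple


class AnswerCache:
    """TTL cache keyed on a normalised question (dict used in place of Redis)."""

    def __init__(self, ttl_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, str]] = {}

    @staticmethod
    def key_for(question: str) -> str:
        # Lowercase and collapse whitespace so near-identical questions share a key.
        normalised = " ".join(question.lower().split())
        return hashlib.sha256(normalised.encode("utf-8")).hexdigest()

    def get_or_compute(self, question: str, compute: Callable[[str], str]) -> str:
        key = self.key_for(question)
        now = self._clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # cache hit: no agent or LLM call is made
        answer = compute(question)  # cache miss: call the (expensive) agent
        self._store[key] = (now, answer)
        return answer
```

With a real Redis client, the dictionary lookup and assignment would be replaced by a get and a set with an expiry, while the key normalisation stays the same.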

Technical Stack

Tools and Technologies

Professional Experience

AI Engineer

Feb'22 - Mar'25

Elastacloud Limited, UK

Intelligent Document Understanding & Conversational AI Platform

- Built an intelligent document pipeline using Azure Form Recognizer for OCR and schema extraction, integrated into a structured document store.

- Implemented RAG pipelines for context-aware answering, using LangChain and Semantic Kernel for multi-step reasoning and memory integration.

- Developed and deployed a FastAPI-based backend with Azure Functions for orchestration and summarization tasks.

- Integrated LLM observability features (latency, response scoring, success/failure rates) using Azure Monitor and App Insights.

- Deployed the solution in a secure, scalable Azure environment with role-based access and integration into enterprise authentication.

- Achieved a 40%+ improvement in document retrieval accuracy by optimizing chunking, embedding models, and vector search configuration.

- Optimised the latency and throughput of AI models to keep the AI application stable.

- Outcome: Enabled users to quickly understand and extract insights from complex business documents, significantly reducing manual review time and improving knowledge accessibility across the organization.

Technical Skills

Tools and Technology

AI/Data Engineer

Jun'21 - Jan'22

BP (British Petroleum), UK

Cloud-Native Lakehouse & ML-Driven Analytics Platform

- Architected and built a modern Lakehouse solution on Azure using Databricks with Delta Lake for bronze/silver/gold data layering, supporting real-time and batch analytics pipelines.

- Automated ELT/ETL pipelines using Azure Data Factory, Azure Stream Analytics, and EventHub, enabling ingestion and processing of large-scale streaming security logs.

- Developed ML-enabled pipelines in Databricks notebooks for pattern detection, data profiling, and tag-matching—validated with 90%+ accuracy against benchmark datasets.

- Integrated Spark-based data quality and profiling workflows using PySpark, improving downstream ML readiness and reducing data noise.

- Collaborated with the AWS data team to support Data Mesh architecture using Lambda, S3, SQS, and DynamoDB for hybrid cloud processing and governance.

- Enabled downstream integration with Azure Sentinel for faster threat detection and alerting by transforming and standardizing high-volume log data.

- Outcome: Delivered BP’s first successful cloud-native data lake for SDS, reducing processing latency by 35% and enabling faster, more accurate cybersecurity response workflows across the organization.

Technical Skills

Tools and Technologies

Lead Consultant

Dec'18 - May'20

Genpact, India

Risk Management Platform Modernization

- Refactored and migrated monolithic risk management modules into modular Spring Boot microservices, reducing legacy dependencies and improving scalability.

- Implemented Spring Batch workflows to manage large-scale data processing and schema migration, enabling seamless upgrades without data loss.

- Developed secure and efficient RESTful APIs to support inter-service communication and external system integration, resulting in a 30% performance improvement.

- Played a key role in code reviews, technical design discussions, and onboarding junior developers as part of the agile delivery team.

- Supported continuous deployment and testing through integration with CI/CD pipelines, ensuring consistent delivery in a regulated enterprise environment.

Technical Skills

Tools and Technologies

Senior Software Engineer

Jun'12 - Oct'18

RCS Tech, India

Enterprise Asset Management & Claims Processing Systems

- Designed and implemented core modules for an enterprise asset management system, deployed across GE and EDS, improving asset tracking and maintenance operations.

- Enhanced LDAP authentication and Single Sign-On (SSO) integration for enterprise applications, streamlining access management and user security.

- Developed and maintained Java-based web services to automate processes such as healthcare claims processing and employee tracking for Vidal Healthcare and HGS.

- Optimized backend performance by refactoring legacy code and improving database access layers using JDBC and Oracle stored procedures.

- Worked closely with client-side teams during on-site deployments and provided ongoing support to ensure smooth adoption and performance tuning.

- Outcome: Successfully improved platform responsiveness and maintainability while reducing technical debt. Delivered a resilient architecture that enabled

- Outcome: Delivered stable and maintainable software solutions that improved operational efficiency and security. Played a key role in long-term client engagements through strong technical delivery and consistent support.

Technical Skills

Tools and Technologies

Professional Courses

Machine Learning Professional (In-Progress)

Databricks

AI-102 AI Engineer Associate

AZ-900 Azure Fundamentals

Microsoft

Prompt Engineering

ITIL Foundation

Coursera

Education

Master of Science - Data Science and Computational Intelligence

Coventry University, Coventry, UK

Bachelor of Technology - Information Technology

Anna University, Tiruchy, India

Exploring Topics

Knowledge Graph (RAG)

Azure, AWS

Neo4j

AWS, Azure